Flink ChunJun: cross-cluster Hive synchronization

Last updated on February 28, 2026 am

🧙 Questions

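Synchronize Hive data from cluster A into Hive on cluster B.

ChunJun's Hive sync connector supports only a limited set of features, so the whole synchronization is done through HDFS instead.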

☄️ Ideas

Configure the hostname mappings in /etc/hosts on the servers of both clusters. This step is essential and must not be skipped.

    sudo vim /etc/hosts

    111.111.111.111 isxcode
    39.98.186.96 isxcode

Cluster A information

    fs.defaultFS: hdfs://isxcode:9000
    tablePath: /user/hive/warehouse/ispong_db.db/users/
    hadoop.user.name: ispong
    # Must be set to true; this is essential.
    dfs.client.use.datanode.hostname: true

Cluster B information

    fs.defaultFS: hdfs://isxcode:30116
    tablePath: /user/hive/warehouse/ispong_db.db/users/
    hadoop.user.name: ispong
    # Must be set to true; this is essential.
    dfs.client.use.datanode.hostname: true

Run ChunJun

Run the job on cluster B.
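Before submitting, it may be worth confirming on the submitting machine that the hostname used in `defaultFS` is actually mapped in /etc/hosts. The helper below is a minimal sketch: `hosts_ips` is a hypothetical name (not part of ChunJun or Hadoop), and it only reads a hosts-format file, so it says nothing about DNS.

```shell
#!/usr/bin/env bash
# Print every IP address that a hosts-format file maps to the given hostname.
# Usage: hosts_ips <hostname> [hosts_file]   (hosts_file defaults to /etc/hosts)
# NOTE: hosts_ips is an illustrative helper, not a ChunJun or Hadoop command.
hosts_ips() {
  local name="$1" file="${2:-/etc/hosts}"
  awk -v name="$name" '
    { sub(/#.*/, "") }              # strip comments before matching
    NF >= 2 {
      for (i = 2; i <= NF; i++)     # fields after the IP are hostnames or aliases
        if ($i == name) print $1
    }
  ' "$file"
}

# Example: hosts_ips isxcode
```

An empty result means the name is not mapped on this machine, which is the situation the hosts step is meant to prevent.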

    bash /opt/chunjun/bin/chunjun-yarn-perjob.sh -job /home/ispong/hive_to_hive.json

    {
      "job": {
        "content": [
          {
            "reader": {
              "name": "hdfsreader",
              "parameter": {
                "path": "/user/hive/warehouse/ispong_db.db/users/",
                "defaultFS": "hdfs://isxcode:9000",
                "column": [
                  {
                    "name": "username",
                    "type": "string"
                  },
                  {
                    "name": "age",
                    "type": "int"
                  }
                ],
                "fileType": "text",
                "fieldDelimiter": "\u0001",
                "hadoopConfig": {
                  "dfs.client.use.datanode.hostname": "true",
                  "fs.defaultFS": "hdfs://isxcode:9000",
                  "hadoop.user.name": "ispong"
                }
              }
            },
            "writer": {
              "name": "hdfswriter",
              "parameter": {
                "path": "/user/hive/warehouse/ispong_db.db/users/",
                "fileName": "pt=180",
                "defaultFS": "hdfs://isxcode:30116",
                "column": [
                  {
                    "name": "username",
                    "type": "string"
                  },
                  {
                    "name": "age",
                    "type": "int"
                  }
                ],
                "fileType": "text",
                "fieldDelimiter": ",",
                "writeMode": "overwrite",
                "hadoopConfig": {
                  "dfs.client.use.datanode.hostname": "true",
                  "fs.defaultFS": "hdfs://isxcode:30116",
                  "hadoop.user.name": "ispong"
                }
              }
            }
          }
        ],
        "setting": {
          "speed": {
            "channel": 1
          }
        }
      }
    }
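With `dfs.client.use.datanode.hostname` set to true, the HDFS client connects to DataNodes by hostname instead of by the address the NameNode reports, which is why the setting matters in both `hadoopConfig` blocks. A quick check of the job file before submitting might look like the sketch below. `check_datanode_hostname` is a hypothetical helper, and it relies on a plain text match rather than real JSON parsing, so treat it as a sanity check only.

```shell
#!/usr/bin/env bash
# Succeed only if the job file sets dfs.client.use.datanode.hostname to "true"
# at least twice (once for the reader and once for the writer).
# NOTE: illustrative helper; it matches text and does not parse JSON.
check_datanode_hostname() {
  local job_file="$1" count
  count=$(grep -oE '"dfs\.client\.use\.datanode\.hostname"[[:space:]]*:[[:space:]]*"true"' "$job_file" | wc -l)
  [ "$((count))" -ge 2 ]
}

# Example:
#   check_datanode_hostname /home/ispong/hive_to_hive.json || echo "setting missing"
```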

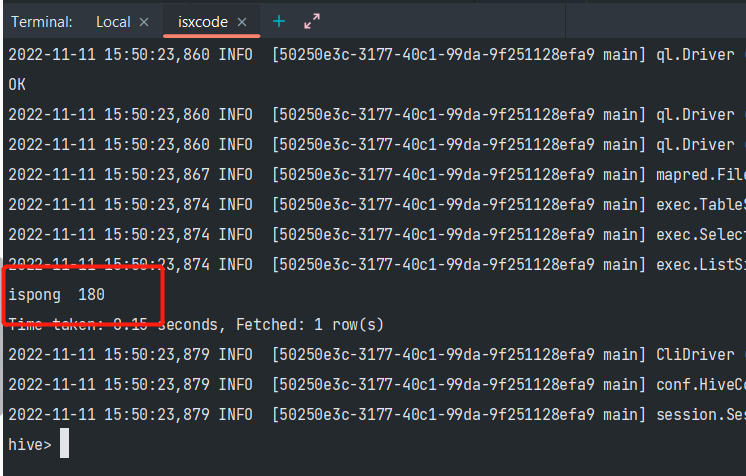

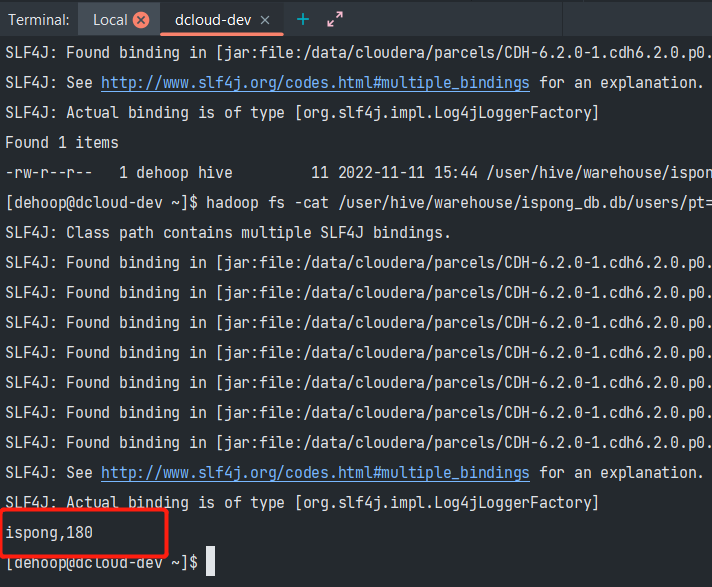

The synchronization succeeded, so this approach works.
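One way to double-check the result from the command line is to list the table directory on both clusters and compare. The sketch below assumes an `hdfs` client is installed on the machine, that the clusters use simple authentication (so `HADOOP_USER_NAME` is honored), and that the same hostname setting is needed for the listing as for the job. `run` and `list_table` are hypothetical helper names; setting `DRY_RUN` prints the command instead of executing it.

```shell
#!/usr/bin/env bash
# Run a command, or only print it when DRY_RUN is set
# (useful on a machine without a Hadoop client).
run() {
  if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

# Recursively list a table directory on one cluster as the given Hadoop user.
# Usage: list_table <defaultFS> <tablePath> <hadoopUser>
# NOTE: illustrative helper; assumes simple (non-Kerberos) authentication.
list_table() {
  local default_fs="$1" table_path="$2" user="$3"
  run env HADOOP_USER_NAME="$user" \
    hdfs dfs -D dfs.client.use.datanode.hostname=true -ls -R "${default_fs}${table_path}"
}

# Example (values taken from the cluster information in this post):
#   list_table hdfs://isxcode:9000  /user/hive/warehouse/ispong_db.db/users/ ispong
#   list_table hdfs://isxcode:30116 /user/hive/warehouse/ispong_db.db/users/ ispong
```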

🔗 Links

Flink ChunJun: cross-cluster Hive synchronization

https://ispong.isxcode.com/hadoop/flink/flink chunjun跨集群hive同步/