Kubernetes HA cluster installation

Last updated on July 15, 2025 am

🧙 Questions

Use rke v1.4.5 to install a Kubernetes cluster, version v1.18.10-rancher1.

Deployment environment: CentOS 7.9, 64-bit.

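An odd number of servers is preferable, because etcd needs a majority of its members to keep quorum. isxcode-main1 and isxcode-main2 are the main nodes, and both are active at the same time (active-active).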

isxcode-main1: controlplane, worker, etcd

isxcode-main2: controlplane, worker, etcd

isxcode-node1: worker, etcd

isxcode-node2: worker, etcd

isxcode-node3: worker, etcd

| hostname | Public IP | Private IP | CPU | Memory |
|---|---|---|---|---|
| isxcode-main1 | 39.98.65.13 | 172.16.215.101 | 8C | 16GB |
| isxcode-main2 | 39.98.218.189 | 172.16.215.100 | 8C | 16GB |
| isxcode-node1 | 39.98.210.253 | 172.16.215.97 | 4C | 8GB |
| isxcode-node2 | 39.98.213.46 | 172.16.215.96 | 4C | 8GB |
| isxcode-node3 | 39.98.210.230 | 172.16.215.95 | 4C | 8GB |
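The SSH steps below address the servers by hostname (for example `ssh-copy-id ispong@isxcode-main1`), so each server has to resolve those names. Assuming there is no internal DNS for them, a minimal sketch that appends the private-IP mappings from the table to a hosts file, skipping names that are already present. The function name `append_missing_entries` is made up for this example.

```bash
#!/usr/bin/env bash
# Private IP and hostname pairs, copied from the table above.
HOSTS=(
  "172.16.215.101 isxcode-main1"
  "172.16.215.100 isxcode-main2"
  "172.16.215.97 isxcode-node1"
  "172.16.215.96 isxcode-node2"
  "172.16.215.95 isxcode-node3"
)

# Append each "ip hostname" pair whose hostname does not already appear
# as a name field (field 2 or later) in the hosts file. Safe to run twice.
append_missing_entries() {
  local hosts_file="${1:-/etc/hosts}"
  local entry name
  for entry in "${HOSTS[@]}"; do
    name="${entry##* }"   # the hostname is the last word of the pair
    if ! awk -v h="$name" '{ for (i = 2; i <= NF; i++) if ($i == h) found = 1 } END { exit !found }' "$hosts_file"; then
      echo "$entry" >> "$hosts_file"
    fi
  done
}
```

On each server this would be run as root against `/etc/hosts`; the default argument is there so the function can also be tried on a scratch file first.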

☄️ Ideas

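Generate an SSH key (run on every server), pressing Enter at every prompt:

ssh-keygen

Set up passwordless SSH from the main nodes to every server (run on both main nodes).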
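The key generation and the five `ssh-copy-id` calls listed next can also be written as one loop. A minimal sketch, assuming the SSH user is `ispong` on every host and the hostnames resolve; by default it only prints the commands (`DRY_RUN=1`), and `DRY_RUN=0` runs them. `run` and `distribute_key` are hypothetical helper names.

```bash
#!/usr/bin/env bash
SSH_USER="ispong"
ALL_HOSTS=(isxcode-main1 isxcode-main2 isxcode-node1 isxcode-node2 isxcode-node3)

# Print the command when DRY_RUN=1 (the default), otherwise execute it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

distribute_key() {
  # Non-interactive equivalent of pressing Enter at every ssh-keygen prompt:
  # RSA key at ~/.ssh/id_rsa with an empty passphrase, only if none exists yet.
  if [ ! -f "$HOME/.ssh/id_rsa" ]; then
    run ssh-keygen -t rsa -N "" -f "$HOME/.ssh/id_rsa"
  fi
  local host
  for host in "${ALL_HOSTS[@]}"; do
    run ssh-copy-id "${SSH_USER}@${host}"
  done
}
```

Usage on each main node: `DRY_RUN=1 distribute_key` to review, then `DRY_RUN=0 distribute_key` (each `ssh-copy-id` still asks for that host's password once).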

ssh-copy-id ispong@isxcode-main1

ssh-copy-id ispong@isxcode-main2

ssh-copy-id ispong@isxcode-node1

ssh-copy-id ispong@isxcode-node2

ssh-copy-id ispong@isxcode-node3

Install Docker (on every server)

Refer to the Docker installation guide.

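Install rke (on one main node only)

# GitHub downloads do not work from mainland China; download the binary elsewhere and upload it with scp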

# wget https://github.com/rancher/rke/releases/download/v1.4.5/rke_linux-amd64

scp rke_linux-amd64 ispong@39.98.65.13:~/

sudo mv rke_linux-amd64 /usr/bin/rke

sudo chmod +x /usr/bin/rke

rke -version

Generate the cluster configuration

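rke config --name cluster.yml

Use the private IPs for every host address.

The Kubernetes version can be changed, but the matching image must exist in the image registry, for example: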

rancher/hyperkube:v1.25.9-rancher2-1
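The interactive session below asks for the same details five times, so a mistyped address is easy to miss. As a cross-check, a minimal sketch that prints a `nodes:` section for the five hosts with the roles from the list above and the `app: ingress` label used later for the ingress controller. Fields not printed here are assumed to fall back to RKE's defaults; `NODES` and `gen_nodes_yaml` are hypothetical names.

```bash
#!/usr/bin/env bash
SSH_USER="ispong"
# "<private ip> <comma-separated roles> <ingress label: yes|no>"
NODES=(
  "172.16.215.101 controlplane,worker,etcd yes"
  "172.16.215.100 controlplane,worker,etcd yes"
  "172.16.215.97 worker,etcd no"
  "172.16.215.96 worker,etcd no"
  "172.16.215.95 worker,etcd no"
)

# Write a minimal RKE nodes: section to stdout.
gen_nodes_yaml() {
  local line ip roles ingress role
  echo "nodes:"
  for line in "${NODES[@]}"; do
    read -r ip roles ingress <<< "$line"
    echo "- address: ${ip}"
    echo "  port: \"22\""
    echo "  user: ${SSH_USER}"
    echo "  ssh_key_path: ~/.ssh/id_rsa"
    echo "  role:"
    for role in ${roles//,/ }; do   # split "worker,etcd" into words
      echo "  - ${role}"
    done
    if [ "$ingress" = "yes" ]; then
      echo "  labels:"
      echo "    app: ingress"
    fi
  done
}
```

The output can be compared against the `nodes:` part of the generated cluster.yml, or redirected to a file (`gen_nodes_yaml > nodes.yml`) and merged by hand.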

[ispong@isxcode-main1 ~]$ rke config --name cluster.yml

[+] Cluster Level SSH Private Key Path [~/.ssh/id_rsa]:

[+] Number of Hosts [1]: 5

[+] SSH Address of host (1) [none]: 172.16.215.101

[+] SSH Port of host (1) [22]:

[+] SSH Private Key Path of host (172.16.215.101) [none]: ~/.ssh/id_rsa

[+] SSH User of host (172.16.215.101) [ubuntu]: ispong

[+] Is host (172.16.215.101) a Control Plane host (y/n)? [y]: y

[+] Is host (172.16.215.101) a Worker host (y/n)? [n]: y

[+] Is host (172.16.215.101) an etcd host (y/n)? [n]: y

[+] Override Hostname of host (172.16.215.101) [none]:

[+] Internal IP of host (172.16.215.101) [none]:

[+] Docker socket path on host (172.16.215.101) [/var/run/docker.sock]:

[+] SSH Address of host (2) [none]: 172.16.215.100

[+] SSH Port of host (2) [22]:

[+] SSH Private Key Path of host (172.16.215.100) [none]: ~/.ssh/id_rsa

[+] SSH User of host (172.16.215.100) [ubuntu]: ispong

[+] Is host (172.16.215.100) a Control Plane host (y/n)? [y]: y

[+] Is host (172.16.215.100) a Worker host (y/n)? [n]: y

[+] Is host (172.16.215.100) an etcd host (y/n)? [n]: y

[+] Override Hostname of host (172.16.215.100) [none]:

[+] Internal IP of host (172.16.215.100) [none]:

[+] Docker socket path on host (172.16.215.100) [/var/run/docker.sock]:

[+] SSH Address of host (3) [none]: 172.16.215.97

[+] SSH Port of host (3) [22]:

[+] SSH Private Key Path of host (172.16.215.97) [none]: ~/.ssh/id_rsa

[+] SSH User of host (172.16.215.97) [ubuntu]: ispong

[+] Is host (172.16.215.97) a Control Plane host (y/n)? [y]: n

[+] Is host (172.16.215.97) a Worker host (y/n)? [n]: y

[+] Is host (172.16.215.97) an etcd host (y/n)? [n]: y

[+] Override Hostname of host (172.16.215.97) [none]:

[+] Internal IP of host (172.16.215.97) [none]:

[+] Docker socket path on host (172.16.215.97) [/var/run/docker.sock]:

[+] SSH Address of host (4) [none]: 172.16.215.96

[+] SSH Port of host (4) [22]:

[+] SSH Private Key Path of host (172.16.215.96) [none]: ~/.ssh/id_rsa

[+] SSH User of host (172.16.215.96) [ubuntu]: ispong

[+] Is host (172.16.215.96) a Control Plane host (y/n)? [y]: n

[+] Is host (172.16.215.96) a Worker host (y/n)? [n]: y

[+] Is host (172.16.215.96) an etcd host (y/n)? [n]: y

[+] Override Hostname of host (172.16.215.96) [none]:

[+] Internal IP of host (172.16.215.96) [none]:

[+] Docker socket path on host (172.16.215.96) [/var/run/docker.sock]:

[+] SSH Address of host (5) [none]: 172.16.215.95

[+] SSH Port of host (5) [22]:

[+] SSH Private Key Path of host (172.16.215.95) [none]: ~/.ssh/id_rsa

[+] SSH User of host (172.16.215.95) [ubuntu]: ispong

[+] Is host (172.16.215.95) a Control Plane host (y/n)? [y]: n

[+] Is host (172.16.215.95) a Worker host (y/n)? [n]: y

[+] Is host (172.16.215.95) an etcd host (y/n)? [n]: y

[+] Override Hostname of host (172.16.215.95) [none]:

[+] Internal IP of host (172.16.215.95) [none]:

[+] Docker socket path on host (172.16.215.95) [/var/run/docker.sock]:

[+] Network Plugin Type (flannel, calico, weave, canal) [canal]:

[+] Authentication Strategy [x509]:

[+] Authorization Mode (rbac, none) [rbac]:

[+] Kubernetes Docker image [rancher/hyperkube:v1.18.10-rancher1]:

[+] Cluster domain [cluster.local]:

[+] Service Cluster IP Range [10.43.0.0/16]:

[+] Enable PodSecurityPolicy [n]:

[+] Cluster Network CIDR [10.42.0.0/16]:

[+] Cluster DNS Service IP [10.43.0.10]:

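[+] Add addon manifest URLs or YAML files [no]:

Modify the configuration file to support HA

vim cluster.yml

The HA-related settings:

ingress:
  provider: nginx  # HA setting
  options: {use-forwarded-headers: "true"}  # HA setting
services:
  etcd:
    snapshot: true
    creation: 6h    # interval between etcd snapshots
    retention: 24h  # how long snapshots are kept

The complete cluster.yml:

nodes: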

- address: 172.16.215.101
  port: "22"
  internal_address: ""
  role:
  - controlplane
  - worker
  - etcd
  hostname_override: ""
  user: ispong
  docker_socket: /var/run/docker.sock
  ssh_key: ""
  ssh_key_path: ~/.ssh/id_rsa
  ssh_cert: ""
  ssh_cert_path: ""
  taints: []
  labels:
    app: ingress
- address: 172.16.215.100
  port: "22"
  internal_address: ""
  role:
  - controlplane
  - worker
  - etcd
  hostname_override: ""
  user: ispong
  docker_socket: /var/run/docker.sock
  ssh_key: ""
  ssh_key_path: ~/.ssh/id_rsa
  ssh_cert: ""
  ssh_cert_path: ""
  taints: []
  labels:
    app: ingress
- address: 172.16.215.97
  port: "22"
  internal_address: ""
  role:
  - worker
  - etcd
  hostname_override: ""
  user: ispong
  docker_socket: /var/run/docker.sock
  ssh_key: ""
  ssh_key_path: ~/.ssh/id_rsa
  ssh_cert: ""
  ssh_cert_path: ""
  labels: {}
  taints: []
- address: 172.16.215.96
  port: "22"
  internal_address: ""
  role:
  - worker
  - etcd
  hostname_override: ""
  user: ispong
  docker_socket: /var/run/docker.sock
  ssh_key: ""
  ssh_key_path: ~/.ssh/id_rsa
  ssh_cert: ""
  ssh_cert_path: ""
  labels: {}
  taints: []
- address: 172.16.215.95
  port: "22"
  internal_address: ""
  role:
  - worker
  - etcd
  hostname_override: ""
  user: ispong
  docker_socket: /var/run/docker.sock
  ssh_key: ""
  ssh_key_path: ~/.ssh/id_rsa
  ssh_cert: ""
  ssh_cert_path: ""
  labels: {}
  taints: []

services:
  etcd:
    image: ""
    extra_args: {}
    extra_binds: []
    extra_env: []
    win_extra_args: {}
    win_extra_binds: []
    win_extra_env: []
    external_urls: []
    ca_cert: ""
    cert: ""
    key: ""
    path: ""
    uid: 0
    gid: 0
    snapshot: true
    retention: 24h
    creation: 6h
    backup_config: null
  kube-api:
    image: ""
    extra_args: {}
    extra_binds: []
    extra_env: []
    win_extra_args: {}
    win_extra_binds: []
    win_extra_env: []
    service_cluster_ip_range: 10.43.0.0/16
    service_node_port_range: ""
    pod_security_policy: false
    always_pull_images: false
    secrets_encryption_config: null
    audit_log: null
    admission_configuration: null
    event_rate_limit: null
  kube-controller:
    image: ""
    extra_args: {}
    extra_binds: []
    extra_env: []
    win_extra_args: {}
    win_extra_binds: []
    win_extra_env: []
    cluster_cidr: 10.42.0.0/16
    service_cluster_ip_range: 10.43.0.0/16
  scheduler:
    image: ""
    extra_args: {}
    extra_binds: []
    extra_env: []
    win_extra_args: {}
    win_extra_binds: []
    win_extra_env: []
  kubelet:
    image: ""
    extra_args: {}
    extra_binds: []
    extra_env: []
    win_extra_args: {}
    win_extra_binds: []
    win_extra_env: []
    cluster_domain: cluster.local
    infra_container_image: ""
    cluster_dns_server: 10.43.0.10
    fail_swap_on: false
    generate_serving_certificate: false
  kubeproxy:
    image: ""
    extra_args: {}
    extra_binds: []
    extra_env: []
    win_extra_args: {}
    win_extra_binds: []
    win_extra_env: []

network:
  plugin: canal
  options: {}
  mtu: 0
  node_selector: {}
  update_strategy: null
authentication:
  strategy: x509
  sans: []
  webhook: null
addons: ""
addons_include: []
system_images:
  etcd: rancher/coreos-etcd:v3.4.3-rancher1
  alpine: rancher/rke-tools:v0.1.66
  nginx_proxy: rancher/rke-tools:v0.1.66
  cert_downloader: rancher/rke-tools:v0.1.66
  kubernetes_services_sidecar: rancher/rke-tools:v0.1.66
  kubedns: rancher/k8s-dns-kube-dns:1.15.2
  dnsmasq: rancher/k8s-dns-dnsmasq-nanny:1.15.2
  kubedns_sidecar: rancher/k8s-dns-sidecar:1.15.2
  kubedns_autoscaler: rancher/cluster-proportional-autoscaler:1.7.1
  coredns: rancher/coredns-coredns:1.6.9
  coredns_autoscaler: rancher/cluster-proportional-autoscaler:1.7.1
  nodelocal: rancher/k8s-dns-node-cache:1.15.7
  kubernetes: rancher/hyperkube:v1.18.10-rancher1
  flannel: rancher/coreos-flannel:v0.12.0
  flannel_cni: rancher/flannel-cni:v0.3.0-rancher6
  calico_node: rancher/calico-node:v3.13.4
  calico_cni: rancher/calico-cni:v3.13.4
  calico_controllers: rancher/calico-kube-controllers:v3.13.4
  calico_ctl: rancher/calico-ctl:v3.13.4
  calico_flexvol: rancher/calico-pod2daemon-flexvol:v3.13.4
  canal_node: rancher/calico-node:v3.13.4
  canal_cni: rancher/calico-cni:v3.13.4
  canal_flannel: rancher/coreos-flannel:v0.12.0
  canal_flexvol: rancher/calico-pod2daemon-flexvol:v3.13.4
  weave_node: weaveworks/weave-kube:2.6.4
  weave_cni: weaveworks/weave-npc:2.6.4
  pod_infra_container: rancher/pause:3.1
  ingress: rancher/nginx-ingress-controller:nginx-0.35.0-rancher2
  ingress_backend: rancher/nginx-ingress-controller-defaultbackend:1.5-rancher1
  metrics_server: rancher/metrics-server:v0.3.6
  windows_pod_infra_container: rancher/kubelet-pause:v0.1.4

ssh_key_path: ~/.ssh/id_rsa
ssh_cert_path: ""
ssh_agent_auth: false
authorization:
  mode: rbac
  options: {}
ignore_docker_version: null
kubernetes_version: ""
private_registries: []
ingress:
  provider: nginx
  options: {use-forwarded-headers: "true"}
  node_selector:
    app: ingress
  extra_args: {http-port: 8080, https-port: 8443}
  dns_policy: ""
  extra_envs: []
  extra_volumes: []
  extra_volume_mounts: []
  update_strategy: null
  http_port: 9090
  https_port: 9443
  network_mode: hostPort
cluster_name: ""
cloud_provider:
  name: ""
prefix_path: ""
win_prefix_path: ""
addon_job_timeout: 0
bastion_host:
  address: ""
  port: ""
  user: ""
  ssh_key: ""
  ssh_key_path: ""
  ssh_cert: ""
  ssh_cert_path: ""
monitoring:
  provider: ""
  options: {}
  node_selector: {}
  update_strategy: null
  replicas: null
restore:
  restore: false
  snapshot_name: ""

dns: null

Create the etcd data directory

Run this on every etcd server.

Note: to use a custom path, configure it in cluster.yml before installing.

Default path: /var/lib/rancher/etcd/
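All five servers carry the etcd role, so the two commands below can also be run from one main node over SSH. A minimal sketch, assuming the passwordless SSH set up earlier and the default path; it prints the commands unless `DRY_RUN=0`. `run` and `prepare_etcd_dirs` are hypothetical names.

```bash
#!/usr/bin/env bash
SSH_USER="ispong"
ETCD_DIR="/var/lib/rancher/etcd/"
ETCD_HOSTS=(isxcode-main1 isxcode-main2 isxcode-node1 isxcode-node2 isxcode-node3)

# Print the command when DRY_RUN=1 (the default), otherwise execute it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

prepare_etcd_dirs() {
  local host
  for host in "${ETCD_HOSTS[@]}"; do
    # -t gives sudo a terminal in case it prompts for a password.
    run ssh -t "${SSH_USER}@${host}" "sudo mkdir -p ${ETCD_DIR} && sudo chown -R ${SSH_USER}:${SSH_USER} ${ETCD_DIR}"
  done
}
```

If `cluster.yml` sets a custom etcd path, `ETCD_DIR` would need to match it.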

sudo mkdir -p /var/lib/rancher/etcd/

sudo chown -R ispong:ispong /var/lib/rancher/etcd/

Downgrade Docker (optional, depending on your environment)
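Whether this step is needed depends on the Docker version each node runs. A minimal sketch that compares a version against the supported series listed in the rke error message below; the list is copied from that message and may differ for other rke releases. `docker_version_supported` and `check_local_docker` are hypothetical names.

```bash
#!/usr/bin/env bash
# major.minor series accepted by rke, copied from its error message.
SUPPORTED_DOCKER=(1.13 17.03 17.06 17.09 18.06 18.09 19.03)

# Return 0 if a version such as 19.03.9 belongs to a supported series.
docker_version_supported() {
  local series s
  series="$(echo "$1" | cut -d. -f1,2)"   # 19.03.9 -> 19.03
  for s in "${SUPPORTED_DOCKER[@]}"; do
    [ "$s" = "$series" ] && return 0
  done
  return 1
}

# Check the Docker server version on the current machine.
check_local_docker() {
  local v
  if ! v="$(docker version --format '{{.Server.Version}}' 2>/dev/null)"; then
    echo "cannot query the Docker daemon" >&2
    return 2
  fi
  if docker_version_supported "$v"; then
    echo "Docker $v is supported"
  else
    echo "Docker $v is not supported; downgrade it, for example to 19.03.x"
    return 1
  fi
}
```

Running `check_local_docker` on each node before `rke up` shows which servers need the downgrade.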

rke does not support newer Docker versions and fails with:

FATA[0258] Unsupported Docker version found [24.0.2] on host [172.16.215.101], supported versions are [1.13.x 17.03.x 17.06.x 17.09.x 18.06.x 18.09.x 19.03.x]

Uninstall Docker and reinstall a supported version:

sudo systemctl stop docker

sudo yum list installed | grep docker

sudo yum remove -y containerd.io.x86_64 docker-buildx-plugin.x86_64 docker-ce.x86_64 docker-ce-cli.x86_64 docker-ce-rootless-extras.x86_64 docker-compose-plugin.x86_64

sudo yum -y install docker-ce-19.03.9-3.el7 docker-ce-cli-19.03.9-3.el7 containerd.io

sudo systemctl start docker

sudo systemctl status docker

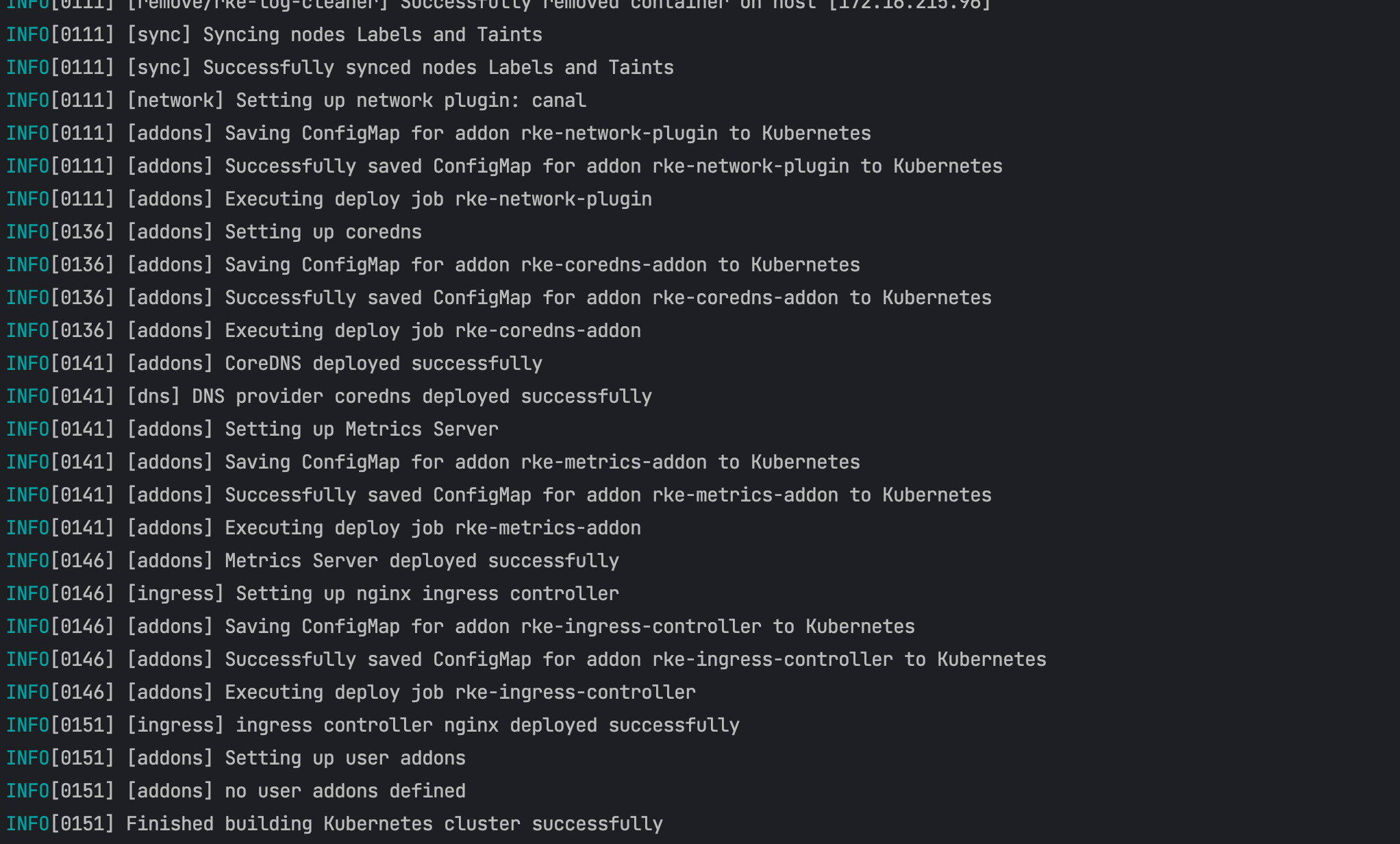

docker -v

Install Kubernetes

nohup rke up --config cluster.yml >> install_k8s.log 2>&1 &

tail -f install_k8s.log
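Because `rke up` runs in the background, a script can wait on the log instead of watching `tail -f`. A minimal sketch, assuming a successful run logs "Finished building Kubernetes cluster successfully" and a fatal error logs a line containing `FATA` (as in the Docker error above); `wait_for_rke` is a hypothetical name and the 1800-second default timeout is arbitrary.

```bash
#!/usr/bin/env bash
# Poll the rke log every 5 seconds.
# Returns 0 on success, 1 on a FATA line, 2 on timeout.
wait_for_rke() {
  local log="${1:-install_k8s.log}" timeout="${2:-1800}" waited=0
  while [ "$waited" -lt "$timeout" ]; do
    if grep -q 'Finished building Kubernetes cluster successfully' "$log" 2>/dev/null; then
      echo "rke up finished"
      return 0
    fi
    if grep -q 'FATA' "$log" 2>/dev/null; then
      grep 'FATA' "$log"
      return 1
    fi
    sleep 5
    waited=$((waited + 5))
  done
  echo "timed out after ${timeout}s"
  return 2
}
```

Usage: `wait_for_rke install_k8s.log && kubectl --kubeconfig kube_config_cluster.yml get nodes`.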

Install kubectl
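Instead of editing the repo file in vim as shown next, the same content can be written in one step. A minimal sketch; `write_kube_repo` is a hypothetical name, and the target path defaults to the file used below.

```bash
#!/usr/bin/env bash
# Write the Aliyun Kubernetes yum repo definition to the given path.
write_kube_repo() {
  local target="${1:-/etc/yum.repos.d/kubernetes.repo}"
  cat > "$target" <<'EOF'
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
}
```

Writing under /etc needs root, for example `sudo bash -c "$(declare -f write_kube_repo); write_kube_repo"`, followed by `sudo yum makecache fast` and `sudo yum install -y kubectl`.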

sudo vim /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

sudo yum makecache fast

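sudo yum install -y kubectl

Grant cluster-admin to the default service account in kube-system (binding name ingress-admin):

kubectl --kubeconfig kube_config_cluster.yml create clusterrolebinding ingress-admin --clusterrole=cluster-admin --serviceaccount=kube-system:default

Make the kube_config_cluster.yml file generated by the installation available to kubectl. It can be copied to ~/.kube/config; here it is moved to /etc instead and referenced through KUBECONFIG: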

sudo mv kube_config_cluster.yml /etc/

sudo vim /etc/profile

export KUBECONFIG=/etc/kube_config_cluster.yml

source /etc/profile

Copy the kubeconfig to the other servers:

scp /etc/kube_config_cluster.yml ispong@isxcode-main2:~/kube_config_cluster.yml

scp /etc/kube_config_cluster.yml ispong@isxcode-node1:~/kube_config_cluster.yml

scp /etc/kube_config_cluster.yml ispong@isxcode-node2:~/kube_config_cluster.yml

scp /etc/kube_config_cluster.yml ispong@isxcode-node3:~/kube_config_cluster.yml

Check the Kubernetes installation
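For a scripted check that all five nodes report Ready (the `kubectl get nodes` output at the end of this section shows the expected state), the STATUS column can be counted. A minimal sketch; `count_ready_nodes` and `check_all_ready` are hypothetical names, and a status such as `Ready,SchedulingDisabled` is deliberately not counted.

```bash
#!/usr/bin/env bash
# Count rows of `kubectl get nodes` output whose STATUS is exactly "Ready".
# The header row (NAME ...) is skipped, so it works with or without --no-headers.
count_ready_nodes() {
  awk '$1 != "NAME" && $2 == "Ready" { n++ } END { print n + 0 }'
}

# Return non-zero unless the expected number of nodes (default 5) is Ready.
check_all_ready() {
  local expected="${1:-5}" ready
  ready="$(kubectl get nodes --no-headers | count_ready_nodes)"
  if [ "$ready" -eq "$expected" ]; then
    echo "all ${expected} nodes are Ready"
  else
    echo "${ready}/${expected} nodes are Ready"
    return 1
  fi
}
```

`kubectl wait --for=condition=Ready node --all --timeout=300s` is an alternative that blocks until the nodes are Ready.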

kubectl get nodes

kubectl get pods -n kube-system

kubectl get pods --all-namespaces

kubectl get ingress

kubectl get service -n ingress-nginx

kubectl get pod -o wide

kubectl exec -n ingress-nginx nginx-ingress-controller-7z4wn -- cat /etc/nginx/nginx.conf  # replace the pod name with one from kubectl get pods -n ingress-nginx

kubectl get service -n ingress-nginx

Example output line:

default-http-backend ClusterIP 10.43.253.158 <none> 80/TCP 21h

Hosts entry mapping ispong.k8s.com to that IP:

10.43.253.158 ispong.k8s.com

kubectl get svc -n ingress-nginx

[ispong@isxcode-main1 ~]$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

172.16.215.100 Ready controlplane,etcd,worker 14m v1.18.10

172.16.215.101 Ready controlplane,etcd,worker 14m v1.18.10

172.16.215.95 Ready etcd,worker 14m v1.18.10

172.16.215.96 Ready etcd,worker 14m v1.18.10

172.16.215.97 Ready etcd,worker 14m v1.18.10

🔗 Links

Kubernetes HA cluster installation

https://ispong.isxcode.com/kubernetes/kubernetes/kubernetes HA集群安装/